Probabilistic Topic Models and Latent Dirichlet Allocation: Part 1

A walkthrough for programmatically identifying topics from The Federalist Papers.

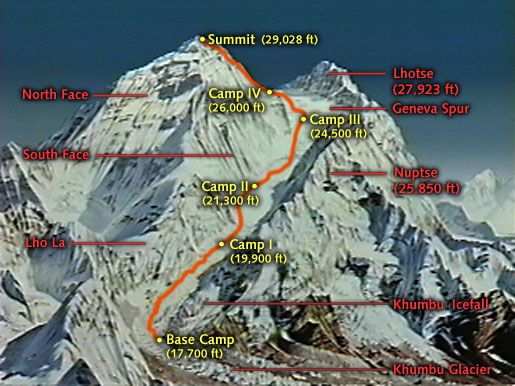

Data Science Altitude for This Article: Camp Two.

In this post, we tackle something a little more difficult than in past posts: using Probabilistic Topic Modeling for thematic assessment in literature. Got a lot of documents that you’re trying to condense into manageable themes? From an academic perspective, you might be interested in thematic constructs in one or more of Mark Twain’s works. Or, for something more present-day, from a socio-political perspective you might want to identify recurring themes in political speeches and see how they track among candidates for a particular office.

Here, we’ll walk through a specific example and break down all the necessary components. Our subject matter will be The Federalist Papers, the 85-article compendium written by Alexander Hamilton, James Madison and John Jay under the nom de plume ‘Publius’ to promote ratification of the U.S. Constitution. We’ll pull it directly from the Project Gutenberg website, home of nearly 60,000 free books and some of the world’s greatest literature.

Before we get started, a little homework for you: given what you know about the U.S. Constitution (and about the formation of democracies or republics worldwide), what three or four topics would you expect someone to use to promote it? And how would you define what makes up a ‘topic’, both in the context of The Federalist Papers and in general? Keep those answers in mind; we’ll get to both…

Some Definitions and Stage-Setting…

Latent Dirichlet Allocation (LDA) as applied here stems from the groundbreaking 2003 paper by David Blei, Andrew Ng, and Michael I. Jordan, followed up about a decade later by Blei’s overview in the Communications of the ACM (if you follow this link, the page has a ‘View as PDF’ option that renders it much more readable). To summarize at a really high level: they treat each topic as a probability distribution over the words in a vocabulary, and each document as a mixture of topics. Any collection of documents is therefore made up of multiple topics, and words that consistently occur together emerge as themes.
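For readers who want the model in symbols, here is the generative process as it is usually written in the LDA literature, with a Dirichlet prior on the topics as well as on the topic proportions (the notation is the standard one, not something used elsewhere in this post). Each topic $\beta_k$ is a distribution over the vocabulary, each document $d$ has topic proportions $\theta_d$, and each word position $n$ in document $d$ gets a topic assignment $z_{d,n}$ and an observed word $w_{d,n}$:

$$
\begin{aligned}
\beta_k &\sim \operatorname{Dirichlet}(\eta), \qquad k = 1,\dots,K \\
\theta_d &\sim \operatorname{Dirichlet}(\alpha), \qquad d = 1,\dots,D \\
z_{d,n} \mid \theta_d &\sim \operatorname{Multinomial}(\theta_d) \\
w_{d,n} \mid z_{d,n}, \beta_{1:K} &\sim \operatorname{Multinomial}(\beta_{z_{d,n}})
\end{aligned}
$$

which gives the joint distribution

$$
p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, w_{1:D}) = \prod_{k=1}^{K} p(\beta_k) \prod_{d=1}^{D} p(\theta_d) \left( \prod_{n=1}^{N_d} p(z_{d,n} \mid \theta_d)\, p(w_{d,n} \mid \beta_{1:K}, z_{d,n}) \right)
$$

Only the words $w_{d,n}$ are observed; the topics, proportions and assignments are hidden (the ‘latent’ part), and the Dirichlet priors supply the rest of the name. Fitting the model means inferring those hidden quantities from the observed words.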

Those of you who have been down this road before might recognize LDA as an acronym for something else: Linear Discriminant Analysis. It’s used to find linear combinations of features that separate two or more classes of objects; its non-linear cousin, Quadratic Discriminant Analysis, allows quadratic class boundaries instead. A topic for another day, definitely…

Our process is going to look something like this. First, you’ve got to clean the data and put it into a format suited to topic modeling. Luckily, R has a solid toolset for this in the tidyverse, specifically the stringr and dplyr packages, and we’ll borrow from those a little bit.
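To make the cleaning step a little more concrete, here’s a minimal sketch using stringr and dplyr. It is not the pipeline from the upcoming cleaning post: the sample lines are a hard-coded stand-in for the downloaded Gutenberg text (the opening of Federalist No. 1, with line breaks and layout assumed), and the ‘FEDERALIST No. N’ header pattern used to number the papers is likewise an assumption.

```r
library(dplyr)
library(stringr)

# Hard-coded stand-in for lines read from the Gutenberg file (layout assumed)
raw_lines <- c(
  "FEDERALIST No. 1",
  "General Introduction",
  "",
  "To the People of the State of New York:",
  "AFTER an unequivocal experience of the inefficacy of the",
  "subsisting federal government, you are called upon to deliberate"
)

clean_lines <- data.frame(text = raw_lines, stringsAsFactors = FALSE) %>%
  mutate(text = str_squish(text)) %>%   # trim ends, collapse inner whitespace
  filter(text != "") %>%                # drop blank lines
  mutate(
    # running count of (assumed) paper headers = paper number for each line
    paper = cumsum(str_detect(text, "^FEDERALIST No\\. [0-9]+")),
    text  = str_to_lower(text),                        # case-fold
    text  = str_replace_all(text, "[^a-z0-9 ]", " "),  # punctuation -> space
    text  = str_squish(text)
  )

# Collapse to one document (row) per paper
papers <- clean_lines %>%
  group_by(paper) %>%
  summarise(text = paste(text, collapse = " "))

print(papers)
```

Splitting on the paper headers before collapsing is what turns one long book into 85 separate documents, which is the unit LDA works with.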

The tm package is pretty decent for building what is called a text corpus, and we’ll be using it in depth here. My only issue with it is that, on several occasions, I’ve found it doesn’t always preserve backward compatibility. While it could be user error on my part, I don’t think so. I’ll go over one example I’ve encountered as we proceed. Backward-compatibility issues deserve a post of their own, and I think I’ll write one later on that topic.
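As a rough illustration of what ‘building a corpus’ means in tm, here’s a minimal sketch that turns a few documents into a corpus and then into a document-term matrix, the input format LDA needs. The three strings are hypothetical stand-ins for cleaned papers, and the specific cleaning steps are illustrative choices rather than the ones we’ll settle on.

```r
library(tm)

# Hypothetical stand-ins for cleaned paper texts (one string per document)
docs <- c(
  "The powers of the federal government over the States.",
  "The Union of the States and the federal Constitution.",
  "Taxation, and the power to raise revenue for the Union."
)

corpus <- VCorpus(VectorSource(docs))
# Base functions such as tolower must be wrapped in content_transformer()
# so that each element stays a tm document rather than a bare string
corpus <- tm_map(corpus, content_transformer(tolower))
corpus <- tm_map(corpus, removePunctuation)
corpus <- tm_map(corpus, removeWords, stopwords("english"))
corpus <- tm_map(corpus, stripWhitespace)

# Rows are documents, columns are terms, cells are counts
dtm <- DocumentTermMatrix(corpus)
inspect(dtm)
```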

SnowballC will be used for ‘stemming’: reducing words to their root form. For example, ‘vibrate’, ‘vibrating’ and ‘vibration’ should all count as instances of the root ‘vibrat’ for purposes of thematic assessment. SnowballC does that conversion for us word by word, and tm’s stemDocument() applies it to entire documents.
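Here’s the ‘vibrate’ example from the paragraph above run through SnowballC’s wordStem() with its English stemmer. The commented line shows the corpus-level equivalent, assuming a tm corpus named `corpus` like the one built earlier.

```r
library(SnowballC)

word_forms <- c("vibrate", "vibrating", "vibration")

# Reduce each word to its stem with the Snowball English stemmer
stems <- wordStem(word_forms, language = "english")
print(stems)

# For a whole tm corpus (assumed to exist as `corpus`):
# corpus <- tm_map(corpus, stemDocument)
```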

On the Python side, unstructured text is handled by the natural language processing package spaCy. It looks very well done, but it too is a discussion for another day; I need to do some further digging into it.

Then, you’ve got to run the LDA statistical methods that produce the topic models. We’ll make heavy use of the topicmodels package, calling its LDA() function. We’ll go into more detail on its specifics after the post on cleaning the data. Then we’ll assess the results and possibly revisit our assumptions.
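For a preview of what calling LDA() looks like, here’s a minimal sketch on a toy corpus. The four ‘documents’ are made up with two deliberately obvious themes, and k = 2 is chosen to match them; picking the number of topics for The Federalist Papers is one of the assumptions we’ll have to revisit.

```r
library(tm)
library(topicmodels)

# Four made-up "documents" with two obvious themes (hypothetical toy data)
docs <- c(
  "taxes revenue treasury duties taxes revenue",
  "army navy militia defense army defense",
  "revenue taxes duties commerce treasury",
  "militia navy army defense war"
)

# Document-term matrix; stopwords = TRUE applies tm's English stopword list
dtm <- DocumentTermMatrix(VCorpus(VectorSource(docs)),
                          control = list(stopwords = TRUE))

# Fit a k = 2 topic model (default method is VEM); the seed makes runs repeatable
lda_fit <- LDA(dtm, k = 2, control = list(seed = 1234))

terms(lda_fit, 3)          # top 3 terms per topic
topics(lda_fit)            # most likely topic for each document
post <- posterior(lda_fit)
round(post$topics, 3)      # per-document topic proportions
```

`post$terms` holds the per-topic word distributions and `post$topics` the per-document topic mixtures, the two quantities the visualization step works from.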

Finally, when you’re satisfied with your methodology, visualize the results so that others can more easily understand the relationships and/or patterns you’ve found. Again, R to the rescue: we’ll dive into the LDAvis package, which builds a very nice interactive, browser-based visualization (it can also be embedded in a Shiny app) for exploring the topics and their composition.

Upcoming Post Roadmap

So, to reiterate, our roadmap for the next several posts is:

- Cleaning and formatting the data.

- A deeper dive into LDA methodology.

- Results of our first pass, with revisions.

- Visualizing results and identifying revisions and enhancements.

- Code summary - I’ll make it available to you all in one place in the final post. No sense trying to piece parts of it together through multiple posts.

Here are the packages and versions used, if you want to try this at home. If you’re unsure how to install them, follow the install process noted in the post where we installed RStudio.
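If you’d like to check your own setup against the list, a snippet along these lines installs any missing packages from CRAN and prints each version in the same `[1] "package: version"` style. It’s a sketch, not necessarily the code that produced the original listing.

```r
pkgs <- c("tm", "dplyr", "stringr", "SnowballC", "topicmodels", "LDAvis")

# Uncomment to install any of the packages that aren't already present
# install.packages(setdiff(pkgs, rownames(installed.packages())))

# Build "package: version" strings and print one [1] line per package
version_lines <- vapply(pkgs, function(p) paste0(p, ": ", packageVersion(p)),
                        character(1))
for (line in version_lines) print(line)
```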

## [1] "tm: 0.7.6"

## [1] "dplyr: 0.8.1"

## [1] "stringr: 1.4.0"

## [1] "SnowballC: 0.6.0"

## [1] "topicmodels: 0.2.8"

## [1] "LDAvis: 0.3.2"

Further Information on the Subject:

Here’s a good YouTube summary of LDA concepts and components from Scott Sullivan.

And another use of LDA from the folks at NASA’s Jet Propulsion Laboratory.

One of the co-authors of the paper referenced earlier is Andrew Ng, a co-founder of Coursera. Coursera is one of the leading online learning platforms for the Data Science community. If you’re not already familiar with their offerings, check them out!