Probabilistic Topic Models and Latent Dirichlet Allocation: Part 2

Data Cleaning. A dirty job, but someone has to do it. Yes, I'm talking to you...

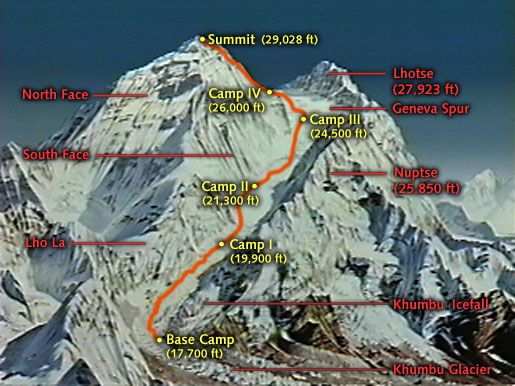

Data Science Altitude for This Article: Camp Two.

Our last post laid out what it will take to reach our goal: a programmatic assessment of the topics in The Federalist Papers.

Before we can throw some fancy mathematics at the subject matter, we have to get the data into a state that’s conducive to analysis. With data coming at us from multiple sources, in both structured and unstructured formats, our coding skills have to be versatile enough to handle it in whatever form it arrives. We also (and maybe more importantly) have to be circumspect… Where does our data come from? Are there any general or situational problems with its validity? Are there any barriers to its usefulness that we have to address?

The real world rarely gift-wraps pre-formatted data that we can feed straight into the models in our testing methodology. Unstructured data brings especially thorny challenges. It’s up to us to turn the proverbial raw block of granite into a chiseled statue. We’ll do that here with a small assist from the stringr package, which extends base R’s string-handling functions.

Accessing Data in The Federalist Papers

As we discussed in the prior post, the data is on the Project Gutenberg website. While you certainly could pull it down and stash it in a directory, that’s not necessary. The code below will grab it directly and put it in a vector for you.

NOTE: Using scan() with url() like this works fine for a one-time data grab, but when you pull multiple files from the same location, managing the connection yourself (opening it, reading from it, and closing it explicitly) is the cleaner and less resource-intensive way to go. In other situations, such as accessing structured databases, it’s crucial. I’ll show both ways of doing it, but I’d like to emphasize the cleaner approach. In general, efficient coding practices speak well of both your skill set and your mindset. Wastefulness stinks…
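One way to guarantee that a connection gets closed, even if the read fails partway through, is to pair open() with on.exit(). Here’s a minimal sketch; read_all_lines() is a hypothetical helper name, and the demo reads a temporary local file so it runs offline. For this post you would hand it url("https://www.gutenberg.org/files/18/18.txt") instead.

```r
# Hypothetical helper: open a connection, read every line, and always close it
read_all_lines <- function(con) {
  open(con, "r")
  on.exit(close(con), add = TRUE)  # runs on normal exit *and* if readLines() errors
  readLines(con, n = -1)
}

# Offline demo: write a tiny file, then read it back through a file() connection
tmp <- tempfile(fileext = ".txt")
writeLines(c("FEDERALIST. No. 1", "", "General Introduction"), tmp)
lines.v <- read_all_lines(file(tmp))
print(lines.v)
```

Putting close() in on.exit() rather than on the last line of the function means the cleanup cannot be skipped by an early error, which is the main practical benefit of managing the connection yourself.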

Make sure the libraries we’ll need for the entire project are loaded, then create our text vector of The Federalist Papers as one unstructured entity. When you run this, you may see warnings about package versions and about functions being masked: some of these packages define functions with the same names as functions in base R or in other packages, and the most recently loaded version takes precedence. You can ignore those for now.

```r
library(tm)
library(dplyr)
library(LDAvis)
library(SnowballC)
library(topicmodels)
library(stringr)

# Connections - not this way:
# text.v <- scan(url("https://www.gutenberg.org/files/18/18.txt"), what = "character", sep = "\n")

# Instead, this way:
con <- url("https://www.gutenberg.org/files/18/18.txt")
open(con, "r")
text.v <- readLines(con, n = -1)
close(con)
```

Let’s browse a small section of the text vector. Doing so gives us a quick feel for a subset of the issues we’ll encounter and will need to address.

- Empty lines exist (elements that are just "").

- One line does not equal a sentence.

- Metadata (data about the data) is intermingled with the literary work.
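To put numbers on the first two issues, here’s a small base-R sketch that works on the seven lines from the text.v[1:7] output shown below (copied by hand, so it runs without downloading anything). Joining lines and splitting after sentence-ending punctuation is a deliberately naive illustration, not the approach this series takes.

```r
# The seven lines printed by text.v[1:7] in this post
sample.v <- c(
  "The Project Gutenberg EBook of The Federalist Papers, by ",
  "Alexander Hamilton and John Jay and James Madison",
  "",
  "This eBook is for the use of anyone anywhere at no cost and with",
  "almost no restrictions whatsoever. You may copy it, give it away or",
  "re-use it under the terms of the Project Gutenberg License included",
  "with this eBook or online at www.gutenberg.net"
)

# Issue 1: empty elements that carry no words
n.empty <- sum(!nzchar(sample.v))

# Issue 2: lines are not sentences. Join the non-empty lines, squeeze runs of
# whitespace, then split wherever whitespace follows ., ! or ?
joined <- gsub("\\s+", " ", paste(sample.v[nzchar(sample.v)], collapse = " "))
sentences <- strsplit(joined, "(?<=[.!?])\\s+", perl = TRUE)[[1]]

cat(n.empty, "empty line(s);", length(sample.v), "lines but",
    length(sentences), "sentences\n")
```

Notice that the first "sentence" also swallows the title and author lines, which is the third issue (metadata mixed in with the text) showing up again.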

```r
text.v[1:7]
## [1] "The Project Gutenberg EBook of The Federalist Papers, by "
## [2] "Alexander Hamilton and John Jay and James Madison"
## [3] ""
## [4] "This eBook is for the use of anyone anywhere at no cost and with"
## [5] "almost no restrictions whatsoever. You may copy it, give it away or"
## [6] "re-use it under the terms of the Project Gutenberg License included"
## [7] "with this eBook or online at www.gutenberg.net"
```

Purging the Metadata

So, where does the metadata start? We’ll have to look a little further down the text. There’s also a bunch of metadata at the tail of the text vector. Try out the following code segments (I’m suppressing the results here); you should be able to tell where the metadata starts and ends. The text.v != "" filter hides the empty lines in the display only, without changing text.v; we’ll remove them for real later:

```r
head(text.v[text.v != ""], 20)
tail(text.v[text.v != ""], 400)
```

The marker lines to look for, and the positions where they fall, are as follows:

```r
# Determine metadata boundaries
start.v <- which(text.v == "FEDERALIST. No. 1")
end.v <- which(text.v == "End of the Project Gutenberg EBook of The Federalist Papers, by ")
sprintf("The body starts at line %d; trailing metadata runs from line %d to the end (%d).", start.v, end.v, length(text.v))
## [1] "The body starts at line 44; trailing metadata runs from line 25615 to the end (25976)."

# Replace the text vector with the portion that is just the literary body.
text.v <- text.v[start.v:(end.v - 1)]
```

Great, so no more metadata. Right? Not exactly… Let’s look again at the text vector.

Notice that we still have the paper’s title (FEDERALIST. No. 1) followed by several lines of explanatory text. Scrolling through the rest of the data shows that each paper has several lines of this kind (General Introduction, etc.). It looks like we only removed the metadata associated with Project Gutenberg’s efforts.

You could make the case that chapter delimiters and explanatory information within each paper are part of the metadata as well. Here, that seems to be the case, though it may not hold when analyzing other works. Either way, we have more entries to purge before the remaining words can be treated as carrying the thematic material. We’ll do that shortly, but first we need to use the occurrences of the capitalized FEDERALIST as chapter boundaries…
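As an aside, the which()-based trimming from the metadata-purging step can be wrapped in a reusable function that also applies the circumspection this post keeps advocating: it refuses to trim unless each marker matches exactly one line. This is a sketch; extract_body() and the toy lines are hypothetical.

```r
# Hypothetical helper: keep lines from the start marker up to (but not
# including) the end marker, after checking each marker occurs exactly once
extract_body <- function(x, start.marker, end.marker) {
  start <- which(x == start.marker)
  end <- which(x == end.marker)
  if (length(start) != 1 || length(end) != 1) {
    stop("expected exactly one start marker and exactly one end marker")
  }
  if (end <= start) stop("end marker appears before start marker")
  x[start:(end - 1)]
}

# Hypothetical toy vector standing in for the raw download
toy.v <- c("license header", "FEDERALIST. No. 1", "body text",
           "End marker", "license footer")
body.v <- extract_body(toy.v, "FEDERALIST. No. 1", "End marker")
print(body.v)
```

Failing loudly on a missing or repeated marker is cheaper than discovering later that a silent zero-length or multi-valued which() result quietly mangled the text.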

```r
head(text.v[text.v != ""], 7)
## [1] "FEDERALIST. No. 1"
## [2] "General Introduction"
## [3] "For the Independent Journal."
## [4] "HAMILTON"
## [5] "To the People of the State of New York:"
## [6] "AFTER an unequivocal experience of the inefficacy of the"
## [7] "subsisting federal government, you are called upon to deliberate on"
```

This next section of code came about when I was much further along, working on what will appear in the next post… I noticed some bogus words that had been created when two real words were joined by a double hyphen.

Those of you coming over from the Linux/Unix world will recognize grep(), used below for pattern matching; R’s version returns the indices of the elements that match. Regular expressions are a whole other post in themselves. The str_replace_all() function comes from the stringr package we mentioned earlier.

So, respectable--the isn’t a word. The two need to be separated, so I replaced every double hyphen in the text vector with a space to force the separation.
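To see why the replacement matters, here’s a small base-R sketch using the first example line printed below. It assumes a later cleaning step strips punctuation with a generic [[:punct:]] regex (a stand-in, not necessarily what the next post uses). gsub() with fixed = TRUE is a base-R counterpart of the str_replace_all() call.

```r
before <- "at least, if not respectable--the honest errors of minds led astray"

# Stripping punctuation first glues the two neighbouring words together...
glued <- gsub("[[:punct:]]", "", before)

# ...whereas replacing "--" with a space first keeps them apart.
# fixed = TRUE treats "--" as a literal string rather than a regex.
separated <- gsub("[[:punct:]]", "", gsub("--", " ", before, fixed = TRUE))

print(glued)
print(separated)
```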

```r
sprintf("There are %d lines containing double hyphens", length(grep("--", text.v)))
## [1] "There are 31 lines containing double hyphens"

# For example:
head(text.v[grep("--", text.v)], 3)
## [1] "at least, if not respectable--the honest errors of minds led astray"
## [2] "people--a people descended from the same ancestors, speaking the same"
## [3] "to offices under the national government,--especially as it will have"

text.v <- str_replace_all(text.v, "--", " ")
```

Identifying Where Document Breakpoints Exist

Remember from our earlier post that Latent Dirichlet Allocation assumes each document is a mixture of several topics, and that each topic is a distribution over words? It infers those topics from how words are distributed within and across documents, so it needs to know where one document ends and the next begins. Now we’re at the point of identifying where to break the text vector into those very documents. We could finish off our cleaning efforts first, but there’s a good reason to tackle this task now rather than later.
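Once the chapter start positions are known, carving the vector into documents can be fairly simple. Here’s one base-R way to do it, sketched on a hypothetical toy vector; the next post may well do this differently (for example, through tm’s corpus tools).

```r
# Hypothetical toy stand-in for text.v: three short "papers"
toy.v <- c("FEDERALIST No. 1", "first paper text",
           "FEDERALIST No. 2", "second paper", "more text",
           "FEDERALIST No. 3", "third paper")
starts <- grep("^FEDERALIST", toy.v)

# Flag the lines that start a chapter; cumsum() then numbers every line
# with the chapter it belongs to (lines before the first header would get 0)
chapter.id <- cumsum(seq_along(toy.v) %in% starts)
docs <- split(toy.v, chapter.id)

# One string per document, dropping the header line itself
docs.text <- vapply(docs, function(d) paste(d[-1], collapse = " "), character(1))
print(docs.text)
```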

Each chapter starts with a capitalized FEDERALIST No. ##, and we need to identify those locations before the text is converted to lowercase, which we’ll do to everything in our next post. If we waited until afterward, any use of the word in the sense of describing a federal form of government would be mixed in with the chapter headings, and we’d lose our easily divisible boundary between papers.
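A tiny sketch of what goes wrong if we wait: once everything is lowercase, even an anchored pattern can no longer tell a chapter header from ordinary prose that happens to begin with the word. The middle line is hypothetical.

```r
x <- c("FEDERALIST No. 1",
       "Federalist arguments run through this hypothetical line",
       "FEDERALIST No. 2")

# Case-sensitive and anchored: only the real headers match
headers.before <- grep("^FEDERALIST", x)

# After lowercasing, the body line is indistinguishable from a header
headers.after <- grep("^federalist", tolower(x))

print(headers.before)
print(headers.after)
```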

So, we’ll create a new vector that identifies the starting line position for each new chapter. But wait - there are 85 papers, and we get a vector that has a length of 86. What’s the deal? To borrow from the language of baseball, we’ve been thrown a curveball…

At the bottom of Federalist No. 70, we find the following note: "There are two slightly different versions of No. 70 included here." A look through the Project Gutenberg version confirms it. Even here, our earlier cautionary tale about being circumspect with the quality of our data pays off: data pollution lurks around every corner. We’ll get rid of one of the chapter 70s in its entirety.
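Rather than spotting the repeat by eye, duplicated() can flag it. The sketch below runs on a hypothetical toy vector; drop_chapter() is a hypothetical helper that removes a chapter from its header line through the line before the next header (the post’s own code below starts one line above the header instead).

```r
# Hypothetical toy stand-in for the chapter portion of text.v
toy.v <- c("FEDERALIST No. 69", "a", "FEDERALIST No. 70", "b",
           "FEDERALIST No. 70", "c", "FEDERALIST No. 71", "d")
starts <- grep("^FEDERALIST", toy.v)
headers <- toy.v[starts]

# fromLast = TRUE flags the *first* copy of a repeated header,
# matching the choice to drop the first chapter 70
first.dup <- which(duplicated(headers, fromLast = TRUE))

# Hypothetical helper: drop chapter k, from its header up to the line before
# the next header (or to the end of the vector for the last chapter)
drop_chapter <- function(x, starts, k) {
  last <- if (k < length(starts)) starts[k + 1] - 1 else length(x)
  x[-(starts[k]:last)]
}

cleaned.v <- drop_chapter(toy.v, starts, first.dup)
print(cleaned.v)
```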

Our final command below shows which lines are the beginning of our chapters, and now the vector is 85 in length.

```r
# Determine starting positions of chapters
# (^ anchors the match to the start of the line; no trailing * is needed)
chap.positions.v <- grep("^FEDERALIST", text.v)

# Huh?
length(chap.positions.v)
## [1] 86

# Two chapter 70s...
text.v[chap.positions.v[69:72]]
## [1] "FEDERALIST No. 69" "FEDERALIST No. 70" "FEDERALIST No. 70"
## [4] "FEDERALIST No. 71"

# Remove the first Chapter 70 and regenerate the chapter positions.
start70a <- chap.positions.v[70] - 1
end70a <- chap.positions.v[71] - 1
text.v <- text.v[-(start70a:end70a)]
chap.positions.v <- grep("^FEDERALIST", text.v)

head(chap.positions.v)
## [1] 1 183 373 550 746 914
length(chap.positions.v)
## [1] 85
```

Our (Last?) Bit of Tidying Up the Data

We’re now left with removing the intra-document metadata (General Introduction, etc., as noted earlier). Working out what to remove was a repetitive effort: read through the text vector, remove lines, and repeat until only topical information remained. Here goes, with a little bit of regex pattern matching. The lines removed include:

- Any line that has Independent Journal anywhere in it.

- Likewise with Daily Advertiser and New York Packet.

- McLEAN, spelled with either a lowercase or capital C.

- 1787 followed by a literal period (the brackets in [.] stop the period from acting as the regex wildcard that matches any character except a newline).

- Lines containing To the People of the State of New York or General Introduction.
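Patterns like these are easy to get subtly wrong, so here’s a base-R sketch that checks them against a few hypothetical probe strings (not lines from the Papers). It also shows a pitfall worth guarding against when removing lines by negative index: if nothing matches, x[-integer(0)] returns an empty vector rather than x. remove_lines() is a hypothetical helper.

```r
# Hypothetical probe strings
probe <- c("hypothetical date line, 1787.",
           "hypothetical count: 17875",
           "McLEAN'S edition (hypothetical)",
           "MCLEAN'S edition (hypothetical)")

loose.dot <- grepl("1787.", probe)     # "." matches any character, so 17875 matches too
literal.dot <- grepl("1787[.]", probe) # [.] matches only a real period
mclean <- grepl("M[cC]LEAN", probe)    # either case for the c

# Negative indexing with an empty index selects nothing at all
x <- c("keep", "drop", "keep")
emptied <- x[-integer(0)]

# Hypothetical helper: remove the given line numbers, safely
remove_lines <- function(x, idx) {
  idx <- unique(idx)
  if (length(idx) == 0) x else x[-idx]
}

print(literal.dot)
print(remove_lines(x, c(2, 2)))
```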

```r
# Remove more intra-document metadata and regenerate chapter start locations.
# grep() already matches anywhere in a line, so no wildcards are needed;
# in a regex, * is a repetition operator, not a shell-style wildcard.
indJournal <- grep("Independent Journal", text.v)
dailyAdv <- grep("Daily Advertiser", text.v)
nyPacket <- grep("New York Packet", text.v)
mcLean <- grep("M[cC]LEAN", text.v)
text1787 <- grep("1787[.]", text.v)
toThePeople <- grep("To the People of the State of New York", text.v)
generalIntro <- grep("General Introduction", text.v)

removeMeta <- unique(c(indJournal, nyPacket, dailyAdv, mcLean, text1787, toThePeople, generalIntro))

# Guard against an empty index: text.v[-integer(0)] would drop every line.
if (length(removeMeta) > 0) text.v <- text.v[-removeMeta]

chap.positions.v <- grep("^FEDERALIST", text.v)
```

In the Next Post

You may have noticed that I’ve left the authors’ names (Jay, Hamilton, Madison) in the text. I’ll get rid of those but will do so in the next post in order to illustrate some upcoming functionality when working with a special object called a corpus.

We’re nearly done with data cleaning. Next, it’s time to put the text into a form we can manipulate more easily. Up next: tm and SnowballC.

Further Information on the Subject:

I can recommend a very good text by Matthew Jockers, Text Analysis with R for Students of Literature, on the intersection of R, text analysis, and literature. If you’re interested, a solid grounding in statistical methods will serve you well.